How to Make Off Grid Data Centers Affordable

Powering data centers with solar and batteries can be compelling with optimized designs.

The Prompt

Data center operators are concerned that their rapidly growing electricity demand is outrunning electric utilities' ability to connect and power them. Potential solutions include utility/permitting reform, nuclear, geothermal, and even off-grid solar with batteries. Casey Handmer gave an overview of off-grid solar + battery systems as a solution on his blog.

I was curious how it might work and used pvlib, the open-source solar modeling package that originated at Sandia National Laboratories, to look at specific requirements for different locations. The model is not investment grade, but it provides rough estimates. I also speculate on how solar-powered data centers might evolve. A hypothetical 100-megawatt data center is the basis for all assumptions. The modeling code is in the appendix.

Solar Panels and Lithium Ion Batteries Only

First is a look at powering a facility at 100% reliability without any alternative generation. The solar array must be able to power the data center and fill the batteries on the gloomiest winter day, leading to significant excess capacity. A lithium iron phosphate (LFP) battery bank will have 18 hours of discharge capacity to get through the night but no excess because it is the most expensive component. There is massive variation between locations, and the results would be worse if the weather model ran for more than one year.

| City | Solar (MW) |
| --- | --- |
| Tucson, AZ | 1477 |
| El Paso, TX | 3017 |
| San Antonio, TX | 4216 |
| Yuma, AZ | 1773 |

The solar array sizes under these assumptions are prohibitively large.
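The sizing rule described above can be sketched in a few lines. This is a minimal illustration, not the appendix model: the function name and the example yields are hypothetical, and it assumes the battery can shift energy freely within a day with no round-trip losses.

```python
def required_solar_mw(daily_yield_mwh_per_mw, demand_mw=100.0):
    """Array size (MW) needed so the worst day still covers a full day of load.

    daily_yield_mwh_per_mw: energy produced per MW of installed solar on
    each day of the year (MWh per MW).
    """
    daily_demand_mwh = demand_mw * 24
    worst_day = min(daily_yield_mwh_per_mw)
    if worst_day <= 0:
        raise ValueError("worst-day yield must be positive")
    return daily_demand_mwh / worst_day


# Hypothetical daily yields, NOT model output
example_yields = [7.5, 6.0, 1.5, 8.0]
print(round(required_solar_mw(example_yields)))  # 2400 / 1.5 = 1600
```

A single gloomy day sets the size of the whole array, which is why the results swing so widely between cities.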

Allowing Diesel Generators

The data center doesn't need any design changes if the solar and battery systems provide AC power like the grid. The data center would also retain its diesel generators.

The gloomy days are outliers in the US Southwest, so meeting part of the load on the worst days with the existing diesel generators reduces the required solar capacity. The optimal scenario is typically when the generators supply 1% of yearly electricity (shown below).

| City | Solar (MW) |
| --- | --- |
| Tucson, AZ | 583 |
| El Paso, TX | 606 |
| San Antonio, TX | 851 |
| Yuma, AZ | 526 |
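A rough sketch of the generator trade-off: size the array for the first day that is not backstopped by generators, then add up the energy the generators must supply on the worse days. The function name and sample yields are hypothetical, and the sketch ignores battery carryover between days.

```python
def size_with_generators(daily_yield_mwh_per_mw, days_on_generators, demand_mw=100.0):
    """Return (array_mw, generator_fraction).

    The array is sized for the day ranked `days_on_generators` from the
    bottom; generators cover the shortfall on every worse day.
    """
    daily_demand = demand_mw * 24
    yields = sorted(daily_yield_mwh_per_mw)
    if not 0 <= days_on_generators < len(yields):
        raise ValueError("days_on_generators must be within the data range")
    design_day = yields[days_on_generators]
    array_mw = daily_demand / design_day
    shortfall = sum(daily_demand - array_mw * y
                    for y in yields[:days_on_generators])
    return array_mw, shortfall / (daily_demand * len(yields))


# Hypothetical yields (MWh per MW installed), NOT model output
array_mw, gen_fraction = size_with_generators([2.0, 4.0, 6.0, 8.0], days_on_generators=1)
print(array_mw, gen_fraction)
```

Sweeping `days_on_generators` shows how quickly the array shrinks compared with how slowly the generator share grows.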

The reduction in system size also makes the total cost approachable. A system for a 100 MW data center in Yuma, AZ would cost ~$7,000 per kilowatt of data center power rating, using conservative assumptions and including the investment tax credit. That is more expensive than a natural gas plant but cheaper than the new Vogtle nuclear power plant. It is a good deal for a company that has made climate commitments that prevent it from building a combined cycle natural gas power plant and needs an independent power source in less than two years to keep up in the AI race.

Co-Optimizing Solar and Data Center Design

Off-grid data centers can have different designs than grid-powered ones, creating an opportunity for simplification. Efficiency is also critical because the solar + battery system is expensive. Global optimization across the system can make the off-grid concept more competitive.

Data Center Efficiency

There is substantial variance in data center energy performance. Lawrence Berkeley National Lab estimates that "standard" performers only use 40% of their energy on computers. The remaining usage is from fans, cooling unit compressors, pumps, power conversions, etc. Many operators have improved efficiency over time, and computers consume 90% of power at the best-performing facilities. The top tier tends to be the "hyper scalers" like Facebook, Google, Microsoft, and Amazon that can justify custom equipment orders and engineering efforts to optimize designs. Typical performers usually take standard equipment and plug it in with little forethought. Many efficiency increases come from deleting unnecessary equipment, and further improvements could be compatible with simplification and cost reduction.
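To make the efficiency gap concrete, the sketch below converts the share of energy going to computers into total facility power. It assumes the 100 MW figure refers to the computing load, which the text does not specify. The ratio of total to IT power is what the industry calls power usage effectiveness (PUE).

```python
def facility_power_mw(it_load_mw, it_fraction):
    """Total facility draw when computers use `it_fraction` of all energy."""
    if not 0 < it_fraction <= 1:
        raise ValueError("it_fraction must be in (0, 1]")
    return it_load_mw / it_fraction


for label, fraction in [("standard", 0.40), ("best-performing", 0.90)]:
    total = facility_power_mw(100, fraction)
    print(f"{label}: {total:.0f} MW total, "
          f"{total - 100:.0f} MW overhead, PUE {1 / fraction:.2f}")
```

Every megawatt of overhead needs its own solar panels and batteries, so the gap matters more off-grid than on-grid.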

Bringing the Heat (Removal)

The cooling systems in most data centers are energy hogs and contribute no direct value. The challenge is immense because every watt the servers consume becomes heat.

Energy-conscious data center operators can't use traditional air conditioning. The most efficient chillers have a coefficient of performance of 5, meaning 100 megawatts of servers would require an extra 20 megawatts for cooling under perfect conditions. Air distribution (fans) can consume more energy than the chillers.
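The chiller arithmetic is simple enough to check directly. In this sketch the coefficient of performance of 5 comes from the text, while the lower values are hypothetical stand-ins for less favorable conditions.

```python
def cooling_power_mw(heat_load_mw, cop):
    """Electrical power a chiller needs to remove `heat_load_mw` of heat."""
    if cop <= 0:
        raise ValueError("cop must be positive")
    return heat_load_mw / cop


# COP of 5 is from the text; 4 and 3 are hypothetical
for cop in (5, 4, 3):
    print(f"COP {cop}: {cooling_power_mw(100, cop):.1f} MW of chiller power")
```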

Data center operators striving for efficiency have made several changes. They design their facilities to use "free" cooling. Free cooling means the temperature of the outside air or evaporatively-cooled water is cold enough to cool the inside air (and the servers). Most operators have increased the air temperature in the data center to facilitate this. And they've figured out clever rack arrangements that dramatically decrease the power required to move the cooling air. These methods are one of the key reasons the most efficient operators can reduce their non-IT energy usage below 10%.

AI servers bring new challenges because many require liquid cooling. Liquid cooling is much more effective but requires significant changes. Water plate cooling is the easiest to integrate into traditional data center design. Pumps move water past cooling plates on the back of each server to remove heat. These systems still require air cooling, so rack layouts must accommodate airflow, and costs will increase.

A "first principles" liquid cooling technology is immersion cooling. The computer boards sit in a tub of dielectric fluid (a fluid that does not conduct electricity) that removes heat from the servers. There are many different designs, but usually, a heat exchanger sits in the tub, and water flows through it to remove heat from the system. Some advantages are eliminating air cooling for the server load, moving racks closer together or making them larger, using less pumping energy than fans, and higher coolant temperature allowing "free" cooling in almost all climates. Cooling power draw for immersion systems is only a few percent of the server load.

Immersion cooling systems with traditional layouts save energy but not capital costs. One study finds that increasing rack density can reduce capital costs by cramming more servers in one rack. The technology has become very popular among Bitcoin miners, suggesting it lowers total expenses. It appears to be a good fit for an off-grid data center filled with hard-to-cool AI servers that need to minimize power draw.

The Direct Current Data Center

Solar panels, batteries, and computers operate on direct current (DC). Switching between direct current and alternating current adds cost and energy losses, and in the worst data centers, this can happen 5-6 times. The extra conversion equipment adds cost, takes up a lot of space, and lowers reliability. Moving the data center architecture to all direct current could solve these issues, and the industry has long been interested.

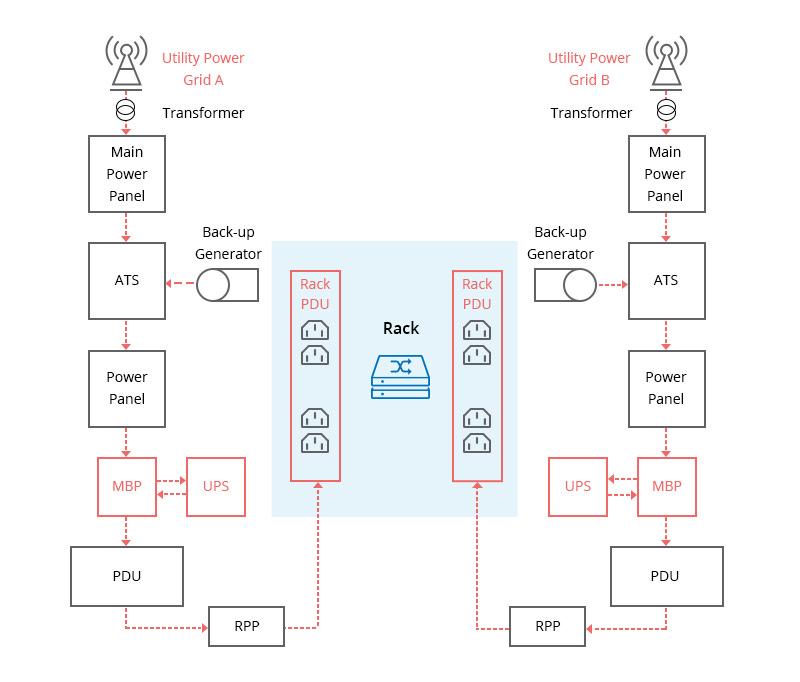

Traditional Data Center Power Distribution; ATS - automatic transfer switch, MBS - maintenance bypass switch, UPS - uninterruptible power supply, PDU - power distribution unit, RPP - remote power panel; Source: FS

The history of DC data centers goes back over 100 years. All telecom exchanges had direct current architectures (and many still do!). The equipment had relatively low power consumption, and batteries were necessary to maintain reliability. 48V DC power distribution could run the equipment with backup batteries in the same circuit.

48V Telecom System; Source

The data center industry took notice of direct current architectures in the mid-2000s as facility size and power draw increased. Many claimed that switching to DC distribution would reduce space requirements by as much as 25% and reduce power consumption by 5%-30%. ABB bought a startup and built several all-DC data centers around 2011. The concept died because switching entirely to DC had barriers, and leading data center operators made more incremental changes that captured most of the benefits.

The lion's share of benefit from switching to DC accrued at the rack and server level from eliminating individual power supplies and rack power distribution units (PDUs). Facebook and other companies began ordering servers that used 12V or 48V DC. The servers connect to a metal strip called a bus bar that runs down the rack carrying 12V or 48V DC. Before this, each server often had two power supplies that plugged into PDUs for redundancy, and they ran at low loads that were very inefficient. In the new concept, each rack has more compact and efficient centralized power supplies that feed the bus bar and operate closer to peak efficiency.

Another issue was centralized uninterruptible power supplies that convert AC to DC and then convert DC back to AC for distribution to the racks. Losses are ~5-10% of the power throughput. Companies moved to configurations that bypassed this conversion under normal conditions, decreasing losses to only 1-2%. Leaders now aspire to move from a centralized UPS to having batteries distributed in each rack. Adding a battery is simpler once a rack has a 12V or 48V DC bus bar and would be the next incremental step in eliminating conversion steps. The architecture for each rack would closely match that of the old telephone exchanges.
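Conversion losses compound, so the end-to-end efficiency is the product of the stage efficiencies. The sketch below compares two chains. The UPS figures fall inside the loss ranges quoted above, but the other stage efficiencies are hypothetical placeholders rather than measured values.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of conversion stages connected in series."""
    return prod(stage_efficiencies)


# Hypothetical stage values: UPS, distribution transformer, server power supply
double_conversion = [0.93, 0.97, 0.90]  # UPS in double-conversion mode, lightly loaded supplies
bypass_and_busbar = [0.985, 0.97, 0.96]  # UPS bypassed, centralized rack supplies

for name, chain in [("double conversion", double_conversion),
                    ("bypass + bus bar", bypass_and_busbar)]:
    print(f"{name}: {chain_efficiency(chain):.1%} delivered to the servers")
```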

DC data centers became less compelling as the benefits diminished while all the challenges remained.

Off-Grid Paradigm Changes

Industry interest in direct current distribution has sparked again now that data centers might use DC power sources like solar, batteries, or fuel cells. Many DC concepts propose 380V DC as the distribution voltage, which these sources can produce directly, eliminating the transformer or rectifier that conditions the incoming power.

Eliminating the uninterruptible power supply system becomes another benefit. There is no reason to maintain a battery bank inside if there is a massive battery system outside. The architecture can collapse into a beautiful simplicity where 380V distribution wires run directly from the power source to racks, 380V is converted into 48V to feed rack bus bars, and individual servers hook into the 48V DC bus bars.

There are still challenges to overcome.

There aren't official standards for direct current data centers.

Few companies are willing to operate outside of set standards.

Arcs make direct current safety challenging.

Voltage is the driving force for arcs. In an alternating current arc, the voltage is at its maximum only briefly and passes through zero twice per cycle at 50-60 Hz, which helps the arc extinguish. Direct current arcs have the full voltage the entire time, and devices like DC switches are much bigger than AC switches to compensate for this. The higher voltage distribution wiring in data centers is often an exposed bus bar on the ceiling, increasing the danger of arcs.

380V DC might not be enough voltage.

AI training data centers have more power usage per rack, leading to high amperage and requiring thicker wires. Voltage needs to increase, but arc issues would become even more pronounced.
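The voltage constraint follows from current being power divided by voltage, and resistive loss in a given conductor scaling with the square of the current. A small sketch, using a hypothetical 100 kW rack that is not a figure from the text:

```python
def bus_current_amps(power_kw, voltage_v):
    """DC current needed to deliver `power_kw` at `voltage_v`."""
    return power_kw * 1000 / voltage_v


def relative_resistive_loss(voltage_v, reference_voltage_v):
    """I^2 R loss in the same conductor relative to a reference voltage."""
    return (reference_voltage_v / voltage_v) ** 2


rack_kw = 100  # hypothetical AI rack power
for volts in (48, 380, 1500):
    amps = bus_current_amps(rack_kw, volts)
    loss = relative_resistive_loss(volts, 380)
    print(f"{volts:>5} V: {amps:7.1f} A, {loss:.2f}x the loss at 380 V")
```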

The concept is still promising, and many of these challenges are addressable.

Integrating the Off-Grid Data Center

Some improvements can happen regardless of the power source and are also the most critical to reducing non-IT energy usage:

Implement Immersion Cooling

AI servers drive extreme cooling loads and benefit from being close together to increase data transfer speeds. Immersion cooling helps with these issues immensely. It should be an easy choice if densification creates enough savings to reduce or eliminate the upfront premium.

Eliminate individual server power supplies in favor of rack bus bars.

Any company amid a giant data center build-out should be able to order units compatible with rack bus bars. The voltage should be at least 48V DC.

The opportunities uniquely available to solar + storage systems are simplifications that can reduce non-IT capital expenditure, add modularity, improve reliability, and decrease construction difficulty. Basic estimates for a fully equipped data center building minus the actual computers are around $10,000/kW. Even 10%-20% savings would substantially impact the relative competitiveness of powering the facility with solar + batteries.

Eliminate uninterruptible power supplies.

Virtually all data centers already have batteries, called uninterruptible power supplies (UPSs), that power the servers during power outages. They can run for 10-15 minutes and are necessary because diesel generators can't start fast enough to keep the servers up. The equipment can cost $500/kW before the extra power drain and space.

The solar + battery system is essentially an uninterruptible power supply. Its components are modular, allowing for designs that handle component failures gracefully. Deleting the UPSs could knock off ~$750/kW after considering equipment, installation, and square footage reduction.

Simplify and Uprate Distribution.

Voltage changes can be as costly as AC/DC conversions, and there should be as few as possible. Most solar developers are moving to 1500V DC, and many battery packs can operate at the same voltage. The logic follows that the distribution into the data center should also be 1500V. 380V DC would require an extra DC:DC conversion and will only be more constrained as data center and rack energy usage increase with AI scaling.

Traditional analog data centers have centralized power distribution systems. The power comes from the grid connection and often travels through a singular uninterruptible power supply bank before branching out to different racks. Centralization isn't necessary after deleting the UPS bank and the grid connection. Combining all the power flows only to redistribute them would introduce single-point failure potential, cause engineering problems at the bottleneck where the data center power is flowing through one line, and add cost and complexity from switches and gear needed to distribute the power properly.

A more logical architecture might be to divide the data center into power blocks served directly by a dedicated portion of the solar + battery array. The 100-megawatt facility might have ten 10-megawatt blocks served directly by a 1500V DC feeder line without connections to other blocks that would require extra switches and routing.

Improve High Voltage DC Safety.

Bus bars are very convenient, especially when a data center might have thousands of racks to connect. However, increasing voltage and using direct current increases safety risks for employees.

A straightforward strategy is to switch to single-point connectors and then try to minimize their cost. The obvious way to do this is to increase the power through each connection and reduce their number. AI server racks are rapidly upping their power usage, anyway.

A more aggressive strategy might be to immerse the high-voltage bus bar in the immersion cooling tub. A configuration like this would make more sense if the immersion tanks started looking more like the meat cases at Costco instead of the ones at Sprouts. Having the bus bars with a physical barrier and in a fluid with more dielectric strength than air could reduce arcing risks and improve safety.

Ensure Reliability.

The concept of reliability is turned on its head when moving from a centralized, analog architecture to a distributed one. There are no single-point failures. Each power block would have over 30 battery packs feeding it. They could be arranged in parallel with no central voltage regulation so that a single pack can't trip a block offline. It would be nearly impossible for the entire data center to go offline from a loss of power, and even individual blocks might have uptime that exceeds today's data centers.
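One way to reason about the parallel-pack claim is a k-of-n availability calculation. This is a simplified sketch that assumes pack failures are independent. The pack count, the number of packs required, and the per-pack availability are all hypothetical.

```python
from math import comb


def block_availability(n_packs, packs_needed, pack_availability):
    """Probability that at least `packs_needed` of `n_packs` are available."""
    p = pack_availability
    return sum(comb(n_packs, k) * p**k * (1 - p)**(n_packs - k)
               for k in range(packs_needed, n_packs + 1))


# Hypothetical: 30 packs per block, each available 99% of the time
for needed in (30, 29, 28, 27):
    print(f"need {needed} of 30: {block_availability(30, needed, 0.99):.6f}")
```

Availability climbs steeply once the block can tolerate even one or two packs being offline, which is the case for running packs in parallel with some spare capacity.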

Handling temporary degradation becomes paramount. Generators that feed into the battery banks are one option. But a robust design would gracefully handle one power block going offline, individual servers failing, and dropping utilization rates to adjust for a daily shortfall.
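Throttling on short days can be sketched as capping each day's utilization at whatever the array can supply. The function name and inputs are hypothetical, and the sketch ignores energy carried over in the batteries from one day to the next.

```python
def daily_utilization(daily_yield_mwh_per_mw, array_mw, demand_mw=100.0):
    """Fraction of full load the site can run each day without generators."""
    daily_demand = demand_mw * 24
    return [min(1.0, array_mw * y / daily_demand)
            for y in daily_yield_mwh_per_mw]


# Hypothetical yields (MWh per MW installed) for three days
utilization = daily_utilization([2.0, 4.0, 8.0], array_mw=600)
print(utilization)  # [0.5, 1.0, 1.0]
print(f"average utilization: {sum(utilization) / len(utilization):.1%}")
```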

Eliminate other permitting roadblocks (generators and water usage).

Data centers paired with solar + storage are exciting for local governments because of the massive property tax bills they pay. Two common offsetting concerns are water usage and air/noise pollution. Addressing these deficiencies can increase the value proposition for local governments.

Evaporative cooling in cooling towers is one of the most efficient cooling methods in every metric except water usage. That can become an issue when data center cooling needs start approaching those of thermal power plants. Immersion liquid cooling solves the problem by allowing higher cooling water temperatures, which unlocks closed-loop water circulation cooled by air in almost every climate.

Diesel generators can lead to local permitting scrutiny because of noise and particulate emissions, especially as data centers grow. Another strategy is to throttle the data center on those 10-20 days a year when the solar + storage system is short. Data centers with customer-facing loads often run at low utilization and might be able to shift customers to data centers in other regions. Data centers focusing on training AIs run more consistently and would need to reduce their training speed. Installed generators cost ~$800/kW, and the data center capital cost, including servers, is ~$40,000/kW, so adding 1% more makeup compute capacity is money ahead of purchasing generators.
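The comparison between generators and makeup compute is simple arithmetic on the figures above. A minimal sketch, with a hypothetical function name:

```python
def makeup_compute_cost_per_kw(datacenter_capex_per_kw, extra_capacity_fraction):
    """Cost per kW of adding spare compute to recover throttled output."""
    return datacenter_capex_per_kw * extra_capacity_fraction


generator_cost_per_kw = 800  # installed generator cost from the text
makeup_cost_per_kw = makeup_compute_cost_per_kw(40_000, 0.01)

print(f"generators: ${generator_cost_per_kw}/kW")
print(f"1% makeup compute: ${makeup_cost_per_kw:.0f}/kW")
```

At these figures, the makeup capacity costs about half as much as the generators it replaces.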

There could conservatively be $100-$200 million in capital cost savings through deleting uninterruptible power supplies, increasing distribution voltage, reducing switching and distribution complexity, and removing generators. Most importantly, the data center should be much smaller, modular, and easier to build over and over.

Assessing Solar and Battery Cost

The solar ($1000/kW AC) and battery ($350/kWh) cost estimates above are conservative for several reasons.

Solar AC capacity includes items an off-grid data center doesn't require. These include inverters, substations, transformers, AC switchgear, grid upgrades, engineering related to AC systems, and overhead from applying for interconnection. Modern solar farms typically have 20%-40% more DC capacity than AC capacity, so cost figures quoted per AC kilowatt already include that excess DC capacity. A rule of thumb is that the DC side accounts for about 70% of the cost of AC capacity. The total might be closer to $550/kW after adjustments. A look at NREL's breakdown of utility-scale solar costs without AC-related costs returns a similar range of $600-$650/kilowatt.

The US also has high panel prices. The global price for a panel today is ~$120/kW, but it is as high as $300/kW in the US. The difference comes from tariffs and trade barriers, and US prices should move towards the global standard.

LFP battery cell prices were >$120/kWh last year, but in China, they have fallen to ~$60/kWh. The proposed data center battery bank also has a lower power rating than most current reference designs, saving some costs. Soft costs like development, engineering, procurement, and construction are a significant portion of installed costs and should decrease with such a large project. The cost might be ~$250/kWh after considering cell price drops, development synergies, and scale.

Any cost reductions in the solar + battery system cost have leverage on competitiveness because of the savings enabled in the data center. The net system cost falls to ~$300 million in the most aggressive case with the investment tax credit, which is close to the fully loaded cost of a natural gas combined cycle power plant. There is ample opportunity for further solar and battery price decreases, and they should be more significant for off-grid systems than grid-tied ones.
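The cost figures in this section can be combined into a rough net system cost. This is a sketch under stated assumptions: the Yuma array size (526 MW) and the unit costs come from the text, while the 1,800 MWh battery (18 hours at 100 MW) and the 30% investment tax credit rate are assumptions, so the outputs will not exactly reproduce every figure quoted in the article.

```python
def net_system_cost_usd(solar_mw, battery_mwh, solar_usd_per_kw,
                        battery_usd_per_kwh, itc_rate=0.30,
                        datacenter_savings_usd=0.0):
    """Solar + battery cost after the tax credit, net of data center savings."""
    gross = (solar_mw * 1000 * solar_usd_per_kw
             + battery_mwh * 1000 * battery_usd_per_kwh)
    return gross * (1 - itc_rate) - datacenter_savings_usd


# itc_rate=0.30 and the 1,800 MWh battery are assumptions, not from the text
conservative = net_system_cost_usd(526, 1800, 1000, 350)
aggressive = net_system_cost_usd(526, 1800, 550, 250,
                                 datacenter_savings_usd=200e6)

print(f"conservative: ${conservative / 1e6:.0f} million")
print(f"aggressive:   ${aggressive / 1e6:.0f} million")
```

Under these assumptions, the aggressive case lands around $320 million, in the neighborhood of the ~$300 million figure above.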

The Coming Shake Out

The path of off-grid data centers is similar to the electric car industry. Putting an electric drive train in a traditional car design does not create a compelling product. Efforts to improve efficiency and remove vestigial features pay off handsomely.

Some efforts, like nuclear-powered data centers, are reminiscent of Toyota's hydrogen push. Incumbents promote zero-emissions technology that minimizes change even if some aspects, like cost or lead times, don't pencil out. Companies attached to current layouts similarly cling to nuclear and geothermal-powered data centers.

The risk to incumbents is that they end up in a Toyota Mirai situation, navigating utility foot-dragging, regulatory delays, technology snags, etc. Competitors might adopt new solar + battery optimized designs and extrude data centers at will in any rural county that prefers paved roads and new football stadiums.

Appendix

from pvlib import pvsystem, modelchain, location, iotools
import pandas as pd

# python3 desktop/scripts/geothermal/pvlibdatacenter.py

# Candidate sites: latitude, longitude, name, altitude, timezone
# (32.43, -111.1, 'Tucson', 700, 'Etc/GMT+7')
# (31.9, -106.2, 'El Paso', 1140, 'Etc/GMT+7')
# (32.6, -114.7, 'Yuma', 43, 'Etc/GMT+7')
# (29.22, -98.75, 'San Antonio')
latitude = 29.22
longitude = -98.75

# Per-unit array (pdc0=1), so output is energy per unit of installed capacity
array_kwargs_tracker = dict(
    module_parameters=dict(pdc0=1, gamma_pdc=-0.004),
    temperature_model_parameters=dict(a=-3.56, b=-0.075, deltaT=3)
)

pd.set_option('display.max_columns', None)

# Single-axis tracking array
arrays = [
    pvsystem.Array(pvsystem.SingleAxisTrackerMount(),
                   **array_kwargs_tracker),
]

loc = location.Location(latitude, longitude)
system = pvsystem.PVSystem(arrays=arrays, inverter_parameters=dict(pdc0=1))
mc = modelchain.ModelChain(system, loc, aoi_model='physical', spectral_model='no_loss')

# Hourly typical meteorological year (TMY) weather from PVGIS
weather = iotools.get_pvgis_tmy(latitude, longitude)[0]
mc.run_model(weather)
ac = mc.results.ac

# Drop the first 7 and last 17 hours so the series splits into whole
# 24-hour days, then sum every hour of each day
hourly = ac.iloc[7:-17].to_numpy()
daily_output = [hourly[i:i + 24].sum() for i in range(0, len(hourly) - 23, 24)]

cutoff_day = 50      # index of the design day after sorting; worse days rely on generators
daily_usage = 2400   # MWh per day (100 MW * 24 h)
demand_in_MW = 100

daily_output.sort()

print('Required Solar Array: ', round(daily_usage/(daily_output[0])))
print('Required Solar Array, generators: ', round(daily_usage/(daily_output[cutoff_day])))
print('Fraction handled by generators: ', (sum(daily_output[0:cutoff_day])*demand_